Argonne’s Exascale Computing Pushes the Limits of 3D Neuronal Mapping

October 21, 2024 — The structure of the human brain is extremely complex and poorly understood. Its 80 billion neurons, each connected to as many as 10,000 other neurons, support functions ranging from sustaining vital life processes to defining who we are as individuals. By obtaining high-resolution images of brain cells, researchers can use computer vision and machine learning techniques to determine brain structure and function at the subcellular level.

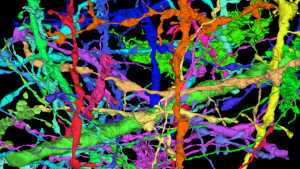

Known as connectomics, this research aims to understand how individual neurons are connected to function as a whole. Neuroscientists and computer scientists work together to create connectomes, detailed maps of the brain made neuron by neuron.

“What we’re trying to do is rebuild the structure and connectivity of the brain,” said Thomas Uram, a data scientist with the Argonne Leadership Computing Facility (ALCF).

The development of the connectomics project is helping researchers working in other fields as well.

“The work done to prepare this project for higher standards will help users of the system in a broader way. For example, electron microscopy algorithms under development promise wider application to x-ray data, especially with improvements to Argonne’s Advanced Photon Source,” said Uram, who works on a major connectomics project using ALCF resources. The ALCF and the Advanced Photon Source are U.S. Department of Energy (DOE) Office of Science user facilities at DOE’s Argonne National Laboratory.

General Technical Requirements

Central to the connectomics project that Uram works on, led by Argonne computer scientist Nicola Ferrier, is Aurora, the ALCF’s new exascale system from Intel and Hewlett Packard Enterprise. The project was supported under the ALCF’s Aurora Early Science Program (ESP) to prepare codes for the system’s architecture and scale.

“Any attempt to reconstruct a brain structure of significant size, with as much data as that involves, requires a lot of computing time, so our research really depends on exascale computing,” Uram explained.

The work leverages imaging techniques (especially using electron microscopy), supercomputing, and artificial intelligence (AI) to improve our understanding of how the brain’s neurons are organized and interconnected.

“Using this technology at this scale is possible today because of the power of ALCF’s computing resources,” Uram said. “Improved methods for studying neural structure have helped ensure that computing will scale from the first cubic millimeters of brain tissue, to the cubic centimeter of a mouse brain beyond that, and to larger volumes of human brain tissue in the future. As imaging technology advances, computing will need to achieve higher performance on post-exascale machines.”

“Connectomics pushes many frontiers: high-tech electron microscopy operating at nanometer resolution; tens of thousands of images, each with tens of gigapixels; precision sufficient to capture the smallest synaptic details; computer vision methods to align corresponding structures across large images; and deep learning networks capable of tracing narrow axons and dendrites over large distances,” he continued, giving a sense of the project’s scope.

Many tools contribute to the 3D reconstruction of neurons; the most prominent are image alignment and segmentation.

“The data we’re working with now is human brain data,” Uram said. “The information comes from a collaborative effort with researchers at Harvard University, who are rapidly pioneering the comparative electron microscope.” Tissue samples need to be prepared extensively for connectivity analysis.

Alignment and Segmentation

“An integral part of our work comes after brain tissue is thinly sliced and imaged with an electron microscope; each slice is imaged in tiles,” said Uram. “After we get a lot of tiles from the electron microscope, we align them so that their contents match, and we do that across all the tiles in that section.” This step is known as stitching.

Before the 3D structure of the neurons can be reconstructed, the 2D contents of neighboring images in the image stack must be aligned. Misalignments can arise when tissue samples are cut into thin sections or during imaging with the electron microscope. The Finite-Element Assisted Brain Assembly System (FEABAS) application, developed by colleagues at Harvard, uses template- and feature-matching techniques for coarse and fine alignment, and uses a network of springs to generate accurate linear and non-linear spatial transformations that align 2D image content between sections.
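To make the template-matching idea concrete, here is a minimal sketch in Python. It is not the FEABAS implementation: the function name `match_template_ncc`, the synthetic data, and the brute-force search are assumptions chosen for illustration. It estimates the offset between two overlapping tiles by sliding a small patch from one tile over the other and keeping the position with the highest normalized cross-correlation.

```python
import numpy as np


def match_template_ncc(image, template):
    """Find where `template` best matches inside `image`.

    Hypothetical helper for illustration only: a brute-force search that
    scores every position with normalized cross-correlation (NCC).
    Returns ((row, col), score) for the best-scoring position.
    """
    ih, iw = image.shape
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            patch = image[r:r + th, c:c + tw]
            p = patch - patch.mean()
            denom = np.sqrt((p ** 2).sum()) * t_norm
            if denom == 0:
                continue  # flat patch: correlation is undefined, skip it
            score = float((p * t).sum() / denom)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


# Synthetic "section" standing in for real microscope data (assumption).
rng = np.random.default_rng(0)
section = rng.random((64, 64))

# Two overlapping tiles; tile_b starts 10 rows and 15 columns into tile_a.
tile_a = section[0:48, 0:48]
tile_b = section[10:58, 15:63]

# Use the top-left corner of tile_b as the template and locate it in tile_a.
template = tile_b[:16, :16]
offset, score = match_template_ncc(tile_a, template)
print("estimated offset of tile_b relative to tile_a:", offset)
```

In practice a correlation of this kind only gives a rigid shift at one location; repeating it at many points across the overlap yields the matches that a deformable model, such as the spring network described above, can then reconcile.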

“Once we have built a complete section, we check the neighboring sections to make sure they are compatible and align them if necessary,” said Uram. “We have to align them very precisely: the lateral resolution from the microscope is four nanometers. Capturing the fine structure correctly in this data depends very much on the quality of the alignment of the neuron content between neighboring images.”

Tracking Neurons with Machine Learning

After stitching and alignment are complete, connectomics researchers use various AI techniques to speed up data processing and analysis.

Uram explained: “We use machine learning to find and trace objects, neurons in fact, in the stack of images that we’ve created. Without machine learning, a human has to sit down and trace the neurons by hand, which greatly limits the amount of data we are able to capture.”

Analyzing a stack of stitched and aligned images, a convolutional neural network model trained to identify neuron bodies and membranes reconstructs the 3D structure of the neurons. The Flood-Filling Network code, developed at Google and adapted to run on ALCF systems, traces individual neurons over long distances, enabling analysis at the synaptic level.
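The "flood filling" idea can be illustrated with a toy example. The sketch below is not the Flood-Filling Network code: the real method uses a trained convolutional network to decide, step by step, which voxels belong to the object being traced. Here that learned decision is replaced by a fixed threshold on a hypothetical membrane-probability volume, and the function name `flood_fill_segment` and the synthetic data are assumptions made for illustration.

```python
from collections import deque

import numpy as np


def flood_fill_segment(membrane_prob, seed, threshold=0.5):
    """Grow one segment outward from `seed` through a 3D volume.

    Illustrative stand-in for a learned tracer: a voxel joins the segment
    if it is 6-connected to the segment and its membrane probability is
    below `threshold`. Returns a boolean mask of the segment.
    """
    mask = np.zeros(membrane_prob.shape, dtype=bool)
    if membrane_prob[seed] >= threshold:
        return mask  # the seed sits on a membrane, nothing to grow
    mask[seed] = True
    queue = deque([seed])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= n[i] < membrane_prob.shape[i] for i in range(3))
            if inside and not mask[n] and membrane_prob[n] < threshold:
                mask[n] = True
                queue.append(n)
    return mask


# Synthetic volume (assumption): 4 sections of 8 x 8 pixels, split into two
# compartments by a membrane "wall" at x == 4.
volume = np.zeros((4, 8, 8))
volume[:, :, 4] = 1.0

segment = flood_fill_segment(volume, seed=(0, 0, 0))
print("voxels in segment:", int(segment.sum()))
```

The fill stops at the wall, so the seed's compartment is labeled while the compartment on the other side is left for a different seed. Tracing thin axons through noisy images is far harder than this, which is why a learned model takes the place of the threshold.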

Deep learning models for connectomic reconstruction were trained on Aurora using approximately 512 nodes, showing a 40 percent performance increase over the course of the project.

“Working with Intel, we’ve been working to run our model through various Aurora systems and other Argonne systems,” Uram said. “That work has been very fruitful, and Intel has been very helpful in learning how to run the model effectively, train the model and use the trained model for classification.”

Once the team has a trained model, it uses the model to segment a large volume of brain tissue. That volume is much larger than the training data, making segmentation the task where Aurora’s computing power is most needed: physically it can be on the order of a cubic millimeter, which at the desired imaging resolution approaches the petabyte scale.
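The petabyte-scale figure can be sanity-checked with a short back-of-the-envelope calculation. The four-nanometer lateral resolution is the value Uram quotes; the 30-nanometer section thickness and the one byte per voxel are assumptions made here for illustration and are not stated in the article.

```python
NM_PER_MM = 1_000_000

LATERAL_NM = 4        # lateral resolution quoted in the article
SECTION_NM = 30       # ASSUMPTION: section thickness, not given in the article
BYTES_PER_VOXEL = 1   # ASSUMPTION: 8-bit grayscale imagery


def voxels_per_cubic_mm(lateral_nm, section_nm):
    """Number of voxels needed to cover one cubic millimeter of tissue."""
    per_side = NM_PER_MM / lateral_nm   # pixels along each lateral axis
    sections = NM_PER_MM / section_nm   # slices through the depth
    return per_side * per_side * sections


voxels = voxels_per_cubic_mm(LATERAL_NM, SECTION_NM)
petabytes = voxels * BYTES_PER_VOXEL / 1e15
teravoxels = voxels / 1e12

print(f"voxels per cubic millimeter: {voxels:.3e}")
print(f"raw size at 1 byte per voxel: {petabytes:.2f} PB")
print(f"equivalent teravoxel volumes: {teravoxels:.0f}")
```

Under these assumptions a single cubic millimeter comes to roughly two petavoxels, or about two thousand teravoxel-sized volumes, which is consistent with the article's description of segmentation as the most compute-hungry step.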

Inference with these models was run on Aurora using approximately 1,024 nodes (with multiple inference processes per GPU) to produce a segmentation of a teravoxel of data. Extrapolating from these runs to the full machine, the researchers expect to soon be able to segment a petavoxel dataset within a few days on Aurora.

Source: Nils Heinonen, ALCF
